Manual Testing Workflow

Introduction

Accessibility audits help ensure that all users can access a site’s content and functionality. Automated testing is commonly estimated to find only about 20–30% of issues, so manual testing is essential for WCAG compliance.

Automated scans catch obvious code-level problems, but context-specific areas such as the following often require manual testing:

- Keyboard navigation

- Screen reader compatibility

- Meaningful alt text

- Clear visual focus indicators

Together, automated and manual testing provide a complete picture of the site’s accessibility status.

Performing a Manual Audit

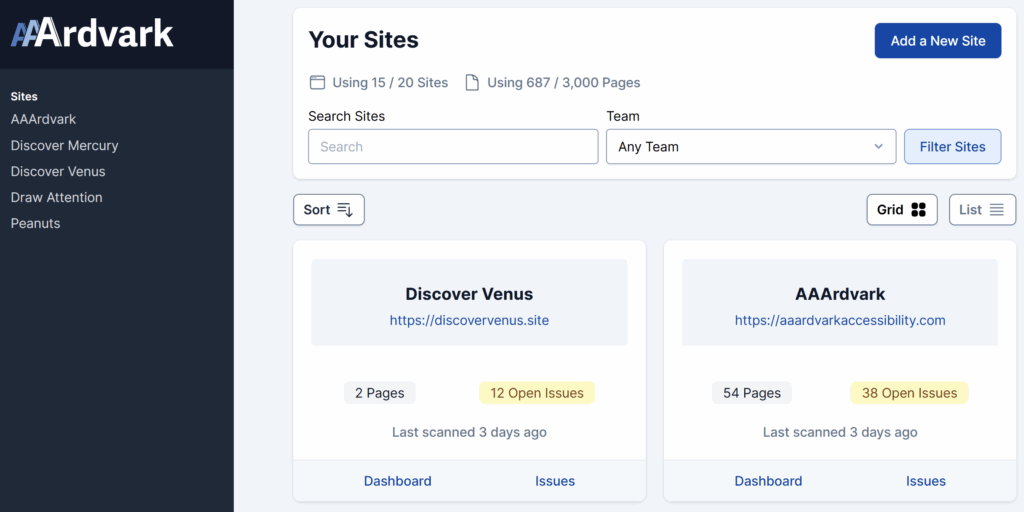

Start a manual audit in AAArdvark by opening the site in the Workspace dashboard.

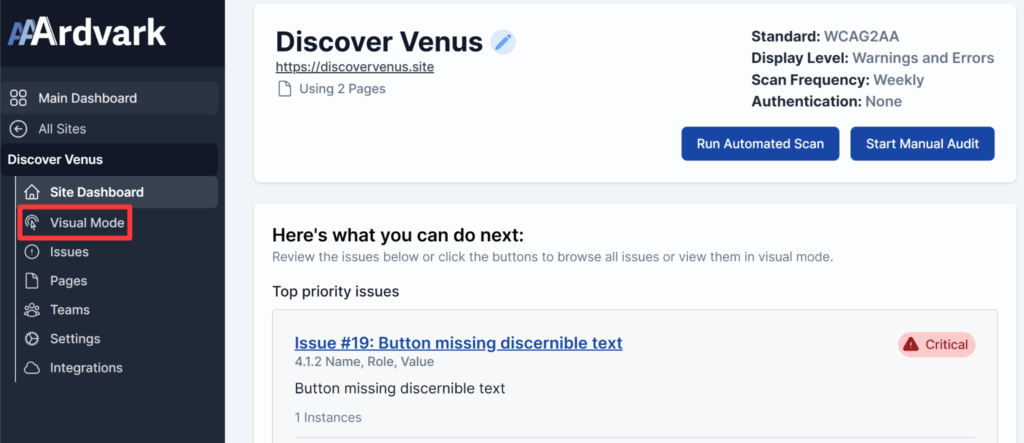

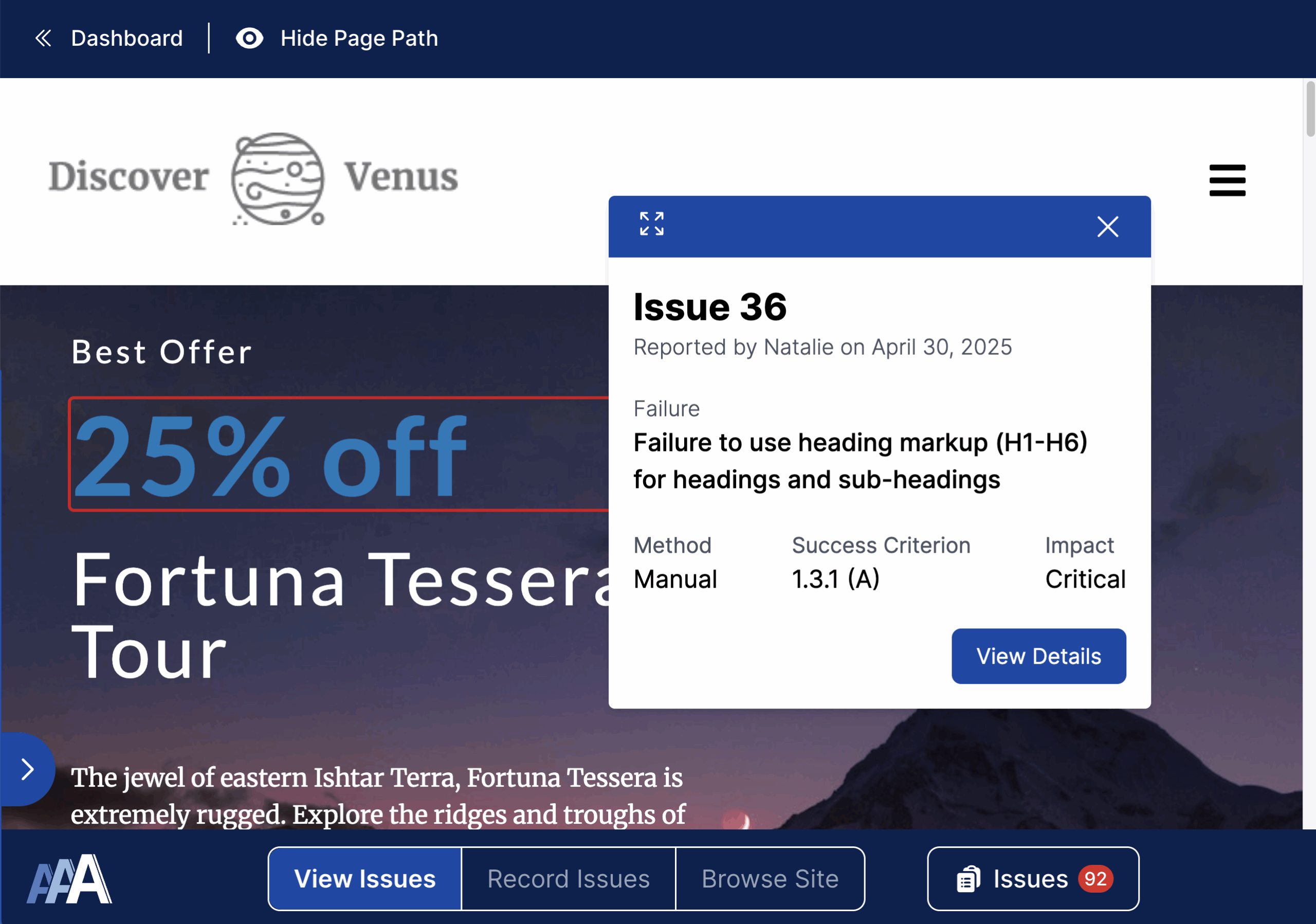

After setup is complete, including adding pages and running initial scans, use Visual Mode from the Site Dashboard to log manual issues.

Learn more about using Visual Mode.

In Visual Mode, Accessibility Testers can:

- Record issues for Developers to address.

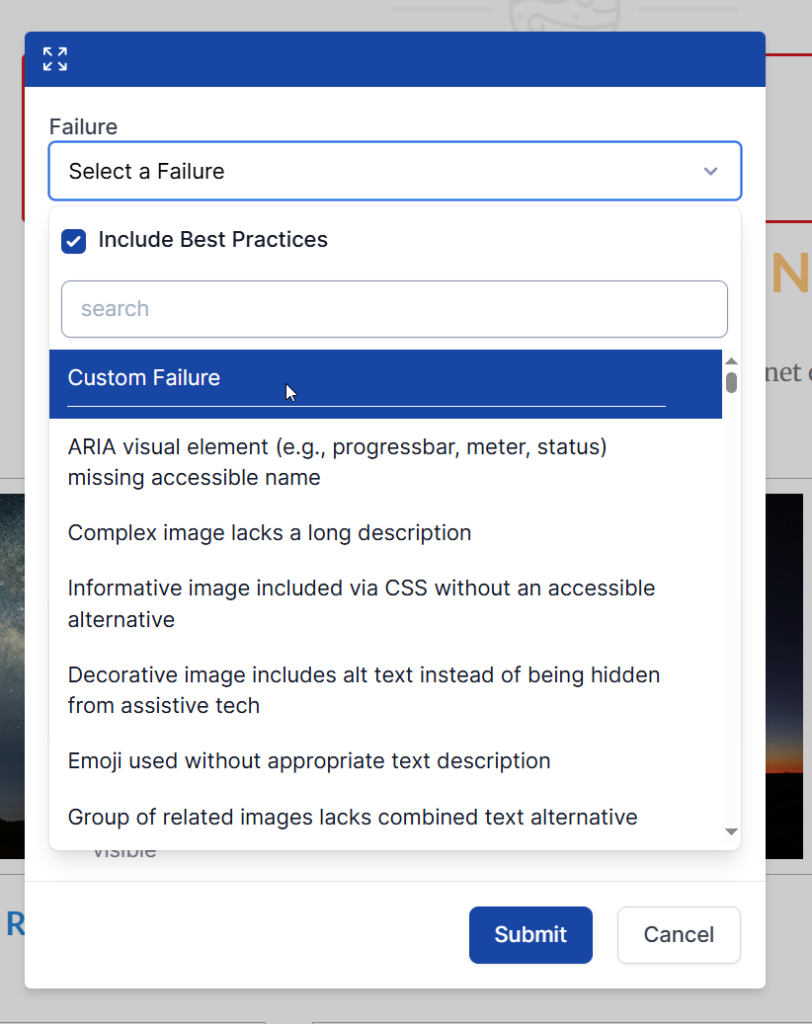

- Select from a pre-set list of failures, already mapped to Severity, WCAG Success Criteria, and possible Solutions.

- Create a Custom Failure by choosing “Custom Failure” in the dropdown.

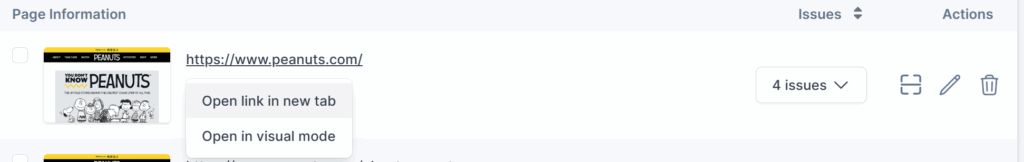

The Pages tab lists all pages needing review. Testers can click a page title to open it in Visual Mode or in a new tab and begin testing.

Tip: to track progress, mark completed pages by adding a note or a ✅ emoji to the page title.

Reviewing Fixes for Manual Issues

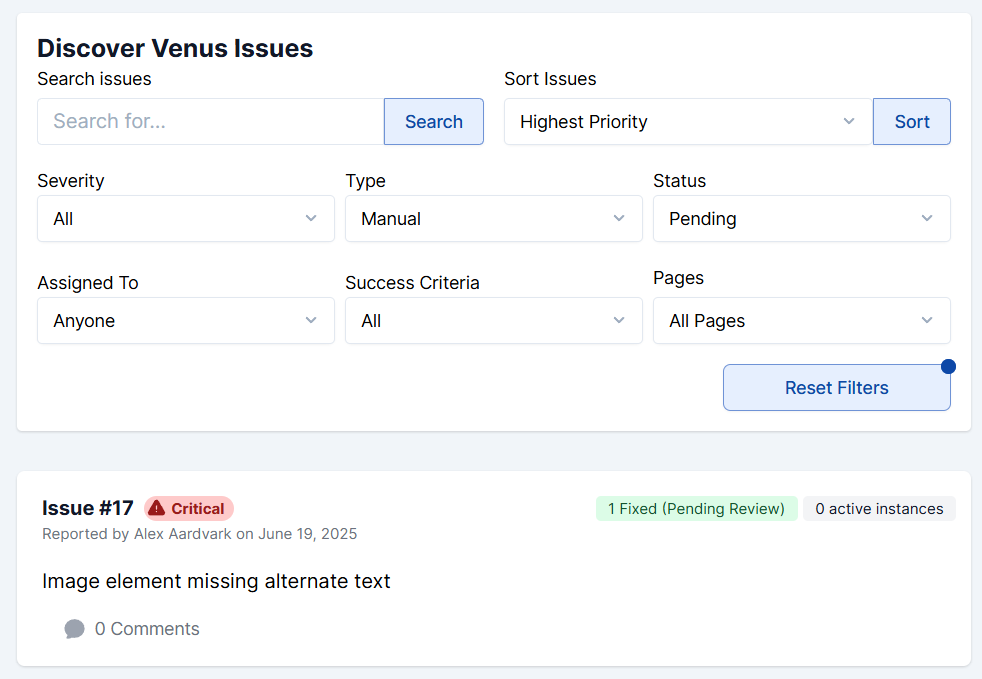

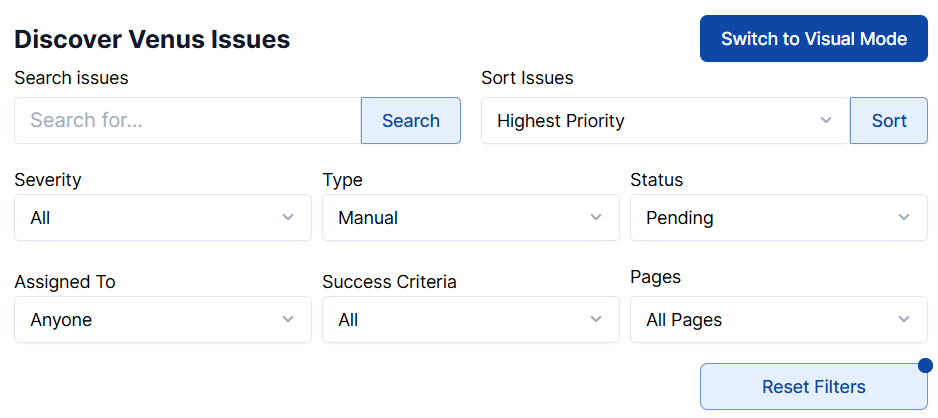

In addition to recording manual issues, Testers and Developers can use the Issues list to find manual issues that need review. Filter the issues by:

- Type: Manual

- Status: Pending Review

If a fix meets the relevant WCAG success criteria, the Tester can mark the issue as Resolved.

AAArdvark Features for Manual Testing

AAArdvark includes several features that streamline manual accessibility testing and remediation.

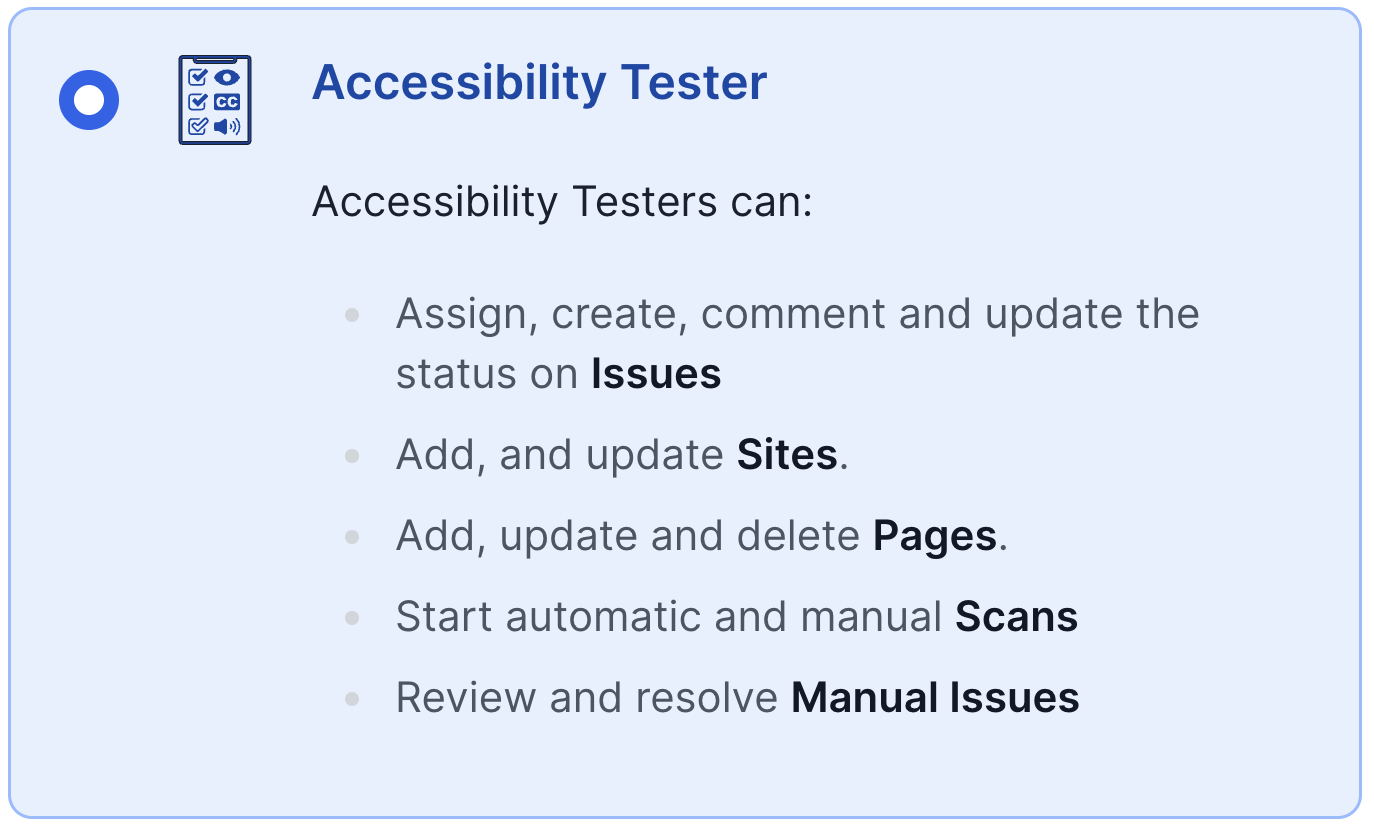

Accessibility Tester Role

Assigning this role lets users record issues, update issue status, assign issues, run scans, and resolve manual issues. Accessibility Testers are also notified when Developers mark an issue as fixed, so they can confirm the resolution.

Accessibility Testers can:

- Create, assign, and comment on Issues, and update their status.

- Add and update Sites.

- Add, update, and delete Pages.

- Start automated and manual Scans.

- Review and resolve Manual Issues.

Creating Manual Issues via Visual Mode

Visual Mode simplifies spotting and documenting issues without spreadsheets or scattered notes. Built-in failures are mapped to solutions and resources, and pinpoint markers show exactly where the issue occurs.

Reviewing Manual Issues and Addressing Them

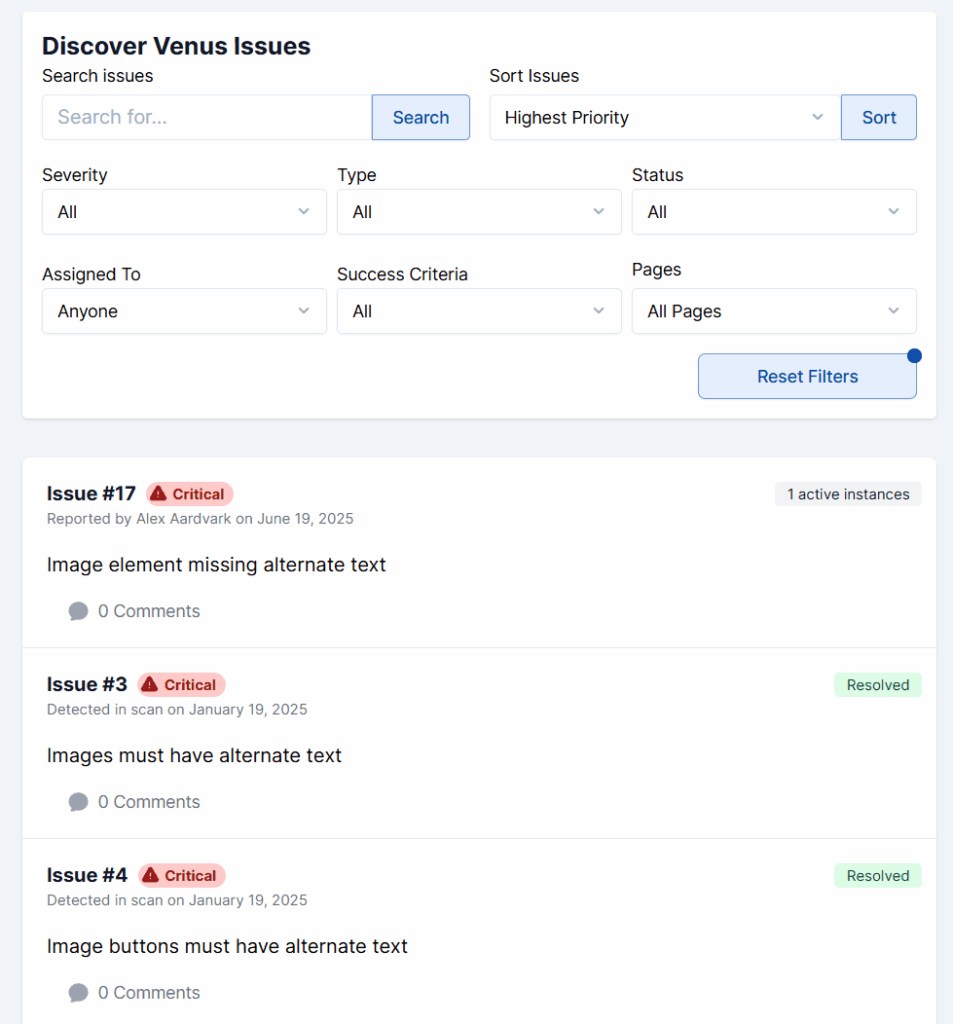

Once recorded, manual issues appear in the Issues list alongside automated issues.

After addressing an issue, the Developer flags it as Pending Review. An Accessibility Tester then verifies the fix and marks the issue as Resolved.
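The review handoff can be pictured as a small state machine. The sketch below is a hypothetical model, not AAArdvark's API: the `Issue` class, the `advance` function, and the initial status name "Open" are illustrative assumptions. It models only the two transitions described here: a Developer moves a fixed issue to Pending Review, and an Accessibility Tester moves a verified issue to Resolved.

```python
from dataclasses import dataclass

# Hypothetical model of the review workflow; not AAArdvark's API.
# "Open" is an assumed name for an issue's initial status.
ALLOWED_TRANSITIONS = {
    # (role, current status) -> next status
    ("Developer", "Open"): "Pending Review",
    ("Accessibility Tester", "Pending Review"): "Resolved",
}


@dataclass
class Issue:
    title: str
    issue_type: str  # e.g. "Manual" or "Automated"
    status: str = "Open"


def advance(issue: Issue, role: str) -> Issue:
    """Move the issue to its next status if this role is allowed to."""
    key = (role, issue.status)
    if key not in ALLOWED_TRANSITIONS:
        raise ValueError(f"{role} cannot change an issue from {issue.status!r}")
    issue.status = ALLOWED_TRANSITIONS[key]
    return issue


issue = Issue("Focus indicator missing on nav links", "Manual")
advance(issue, "Developer")             # fix applied, flagged for review
advance(issue, "Accessibility Tester")  # fix verified
print(issue.status)  # Resolved
```

Keeping the rules in one lookup table makes the separation of duties explicit: in this model, a Developer cannot mark an issue as Resolved directly.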

Filters for Issues

Filters let Testers and Developers narrow the Issues list by priority, severity, type, status, assignment, success criteria, or page.

For manual reviews, filter by:

- Type: Manual

- Status: Pending Review

This makes it easy to track progress and confirm when issues are resolved.
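To illustrate what the Type and Status filters select, here is a minimal sketch over a hypothetical list of issue records, such as you might have after exporting issues. The record fields (`id`, `type`, `status`, `page`) and the `filter_issues` helper are assumptions for illustration, not part of AAArdvark.

```python
# Hypothetical issue records; field names are assumptions, not AAArdvark's schema.
issues = [
    {"id": 1, "type": "Manual", "status": "Pending Review", "page": "/contact"},
    {"id": 2, "type": "Automated", "status": "Pending Review", "page": "/"},
    {"id": 3, "type": "Manual", "status": "Resolved", "page": "/about"},
    {"id": 4, "type": "Manual", "status": "Pending Review", "page": "/"},
]


def filter_issues(records, **criteria):
    """Return the records whose fields match every given criterion."""
    return [r for r in records if all(r.get(k) == v for k, v in criteria.items())]


# Equivalent of filtering by Type: Manual and Status: Pending Review.
review_queue = filter_issues(issues, type="Manual", status="Pending Review")
print([r["id"] for r in review_queue])  # [1, 4]
```

Combining criteria with `all(...)` mirrors stacking filters: each added filter narrows the list further.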