1.2.4 Captions (Live)

Live videos that include audio must be captioned.

What is it?

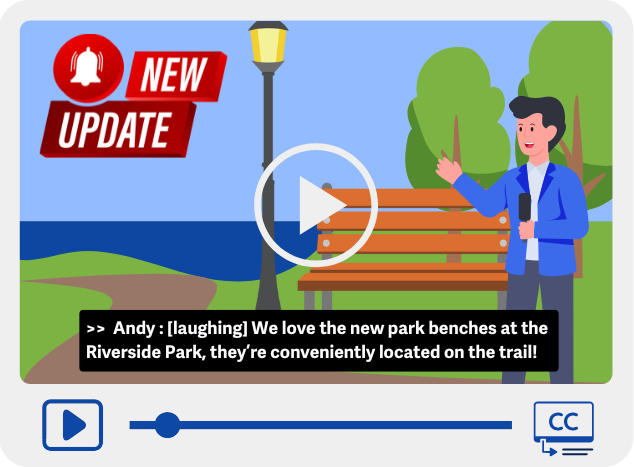

Live video streamed on a web page should include synchronized captions. The captions should convey all meaningful audio: dialogue, who is speaking, human sounds (such as laughing or snoring), and other important sounds such as background music or nature sounds.

The goal is to give a text option for live media so people who are deaf or hard of hearing can follow along with live content too.

Why does it matter?

Without captions for live video, users who need text to understand audio content might find the video hard to follow or see it as not useful. Captions convey both speech and other important sounds, which helps users understand the context of what's happening.

The visuals in a video tell only half of the story; the sounds also play an important role in communicating the message. For example, a live news report might cover a story about new benches at a local park. The video may show the reporter sitting on the benches, but without captions for the dialogue, a user who can't hear it wouldn't know where the benches or the park are located, or whether the story affects them.

Who is affected?

People who are deaf. People with limited hearing. People with auditory processing difficulties.

People who are deaf or hard of hearing depend on captions or other text options to follow the audio in video content. Without text, live video becomes confusing or useless, which is especially frustrating for important live content like news broadcasts, webinars, sports events, conferences, or lectures.

People with auditory processing difficulties can read the captions to better understand the content, giving them an alternative for when they can’t interpret spoken words or other sounds.

How to implement 1.2.4

This section offers a simplified explanation and examples to help you get started. For complete guidance, always refer to the official WCAG documentation.

Generating Captions for Live Content

Synced captions can be added to live video using real-time captioning technology or services. These services usually involve a trained captioner watching and listening to the live content and typing out what they hear.

Real-time captions for live videos aren’t perfect since there’s not enough time to fix mistakes, and captioners don’t have a chance to re-listen to sounds for accuracy, especially when sounds or talking happen quickly.
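
As a rough illustration of what a live caption stream carries, the TypeScript sketch below formats one caption segment as a WebVTT cue, with the speaker marked by a voice tag and a non-speech sound in square brackets. WebVTT is only one possible caption format and is assumed here. The `CaptionSegment` type and the `formatCue`, `toTimestamp`, `pad`, and `escapeCueText` helpers are hypothetical names for this example, not part of any captioning service's API.

```typescript
// One captioned moment in a live stream (hypothetical shape for this sketch).
interface CaptionSegment {
  startSeconds: number;
  endSeconds: number;
  speaker?: string; // who is talking, if known
  text?: string;    // the spoken words
  sound?: string;   // a non-speech sound, e.g. "laughter"
}

// Left-pad a number with zeros to the given width.
function pad(n: number, width: number): string {
  let s = String(n);
  while (s.length < width) s = "0" + s;
  return s;
}

// Convert seconds to a WebVTT timestamp (hh:mm:ss.ttt).
function toTimestamp(totalSeconds: number): string {
  const ms = Math.round(totalSeconds * 1000);
  const hours = Math.floor(ms / 3600000);
  const minutes = Math.floor((ms % 3600000) / 60000);
  const seconds = Math.floor((ms % 60000) / 1000);
  const millis = ms % 1000;
  return pad(hours, 2) + ":" + pad(minutes, 2) + ":" + pad(seconds, 2) + "." + pad(millis, 3);
}

// Escape characters that have special meaning inside WebVTT cue text.
function escapeCueText(text: string): string {
  return text.replace(/&/g, "&amp;").replace(/</g, "&lt;").replace(/>/g, "&gt;");
}

// Build one cue: a timing line, then the sound (if any), then the speech (if any).
function formatCue(segment: CaptionSegment): string {
  const lines: string[] = [];
  if (segment.sound) {
    lines.push("[" + escapeCueText(segment.sound) + "]");
  }
  if (segment.text) {
    const spoken = escapeCueText(segment.text);
    lines.push(
      segment.speaker
        ? "<v " + escapeCueText(segment.speaker) + ">" + spoken + "</v>"
        : spoken
    );
  }
  const timing = toTimestamp(segment.startSeconds) + " --> " + toTimestamp(segment.endSeconds);
  return [timing].concat(lines).join("\n");
}
```

For example, a segment running from 3.5 to 6 seconds with the speaker "Reporter", the text "We are at the park.", and the sound "birds chirping" produces the timing line `00:00:03.500 --> 00:00:06.000`, followed by `[birds chirping]` and `<v Reporter>We are at the park.</v>` on separate lines.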

Open or Closed Captions

The live captions can be added to videos as either open or closed captions.

Open captions are always visible on the video, with the text showing in real time, which is great when you want everyone to see the captions. Closed captions, on the other hand, can be turned on or off by the viewer and are sent as a separate stream of data.
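
Because closed captions travel separately from the picture, the player can show or hide them on request. The TypeScript sketch below assumes a browser-based player whose captions are exposed as a text track with a `mode` property; the standard `TextTrack.mode` values are `"disabled"`, `"hidden"`, and `"showing"`. `TrackMode`, `CaptionTrackLike`, and `setCaptionsVisible` are hypothetical names for this example, and a real player may expose its own caption controls instead.

```typescript
// The three display modes defined for the browser's TextTrack.mode property.
type TrackMode = "disabled" | "hidden" | "showing";

// Minimal shape needed for this sketch; a browser TextTrack has this property.
interface CaptionTrackLike {
  mode: TrackMode;
}

// Turn closed captions on or off and return the resulting mode.
// "hidden" keeps the track active without drawing its cues, so live
// captions keep arriving and can be shown again right away.
function setCaptionsVisible(track: CaptionTrackLike, visible: boolean): TrackMode {
  track.mode = visible ? "showing" : "hidden";
  return track.mode;
}
```

In a browser this might be called as `setCaptionsVisible(video.textTracks[0], true)`, assuming `video` is an HTML video element and its first text track holds the captions. Open captions need no such control, since the text is part of the picture itself.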

Conclusion

Providing synced captions for live videos is key to making real-time content accessible for people who are deaf or hard of hearing. Captions not only show spoken words but also important non-verbal sounds, helping everyone understand the full context of the video. While real-time captioning has some accuracy challenges, it’s a vital step in making content inclusive.